SITUATION #194

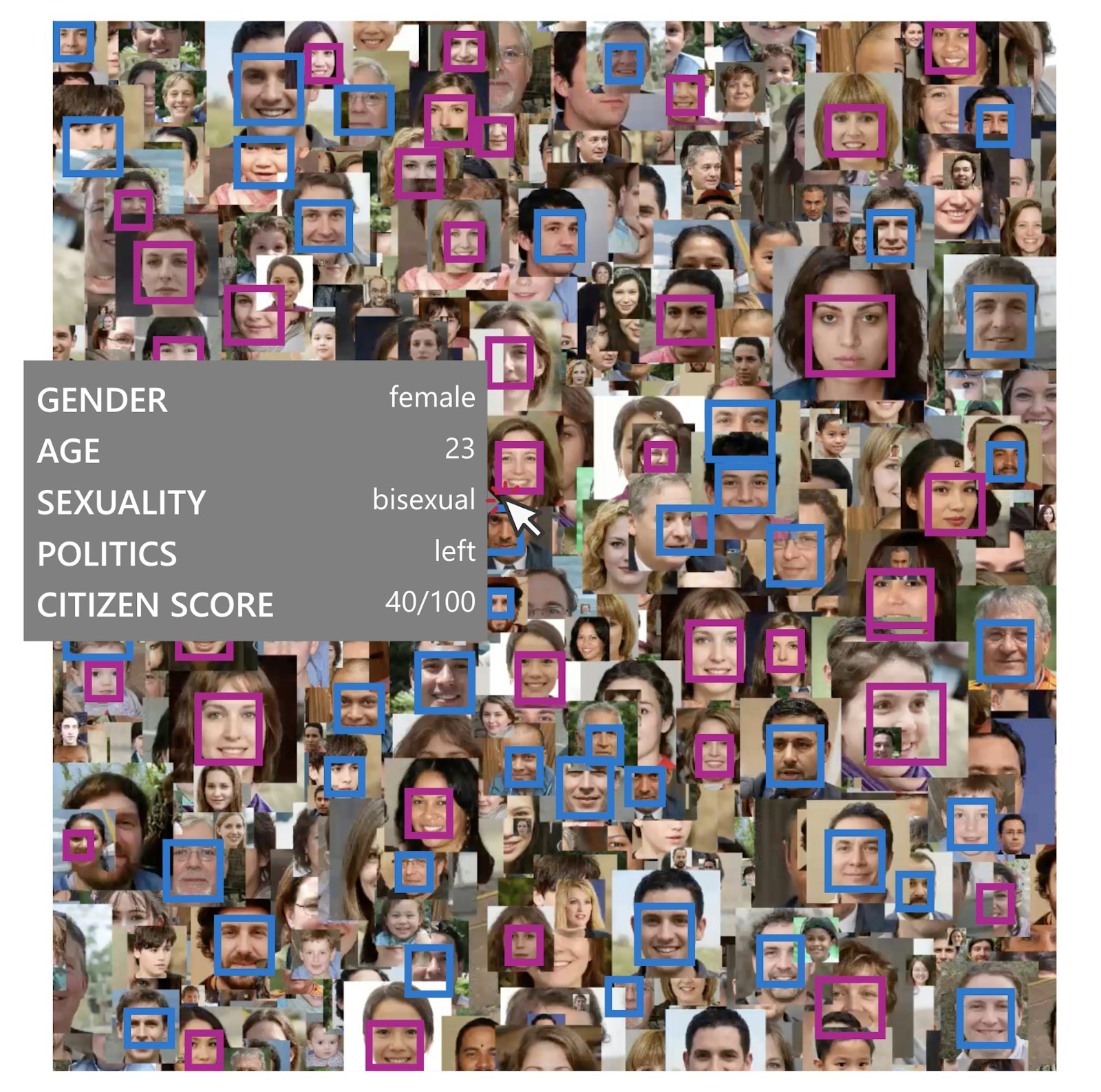

Autocratic governments are pushing the limits of intelligent recognition software like never before. Recent breakthroughs in A.I. allow automated recognition to be scaled in real time to every available digital and physical footprint. This leads to a whole new level of surveillance and state control. Not only can automated facial algorithms now determine the age and gender of a person in a matter of seconds, but they can also profile that person's sexual orientation and political views. The technology is already being used by law enforcement to recognise faces from the billions of scanned social media profile pictures. Social credit systems, currently being piloted in China, use facial recognition to assign each citizen an automated score and grant or restrict certain rights depending on that score. We are witnessing an unprecedented violation of privacy that is only just beginning.

In Face/Off Singularity, an online project commissioned by Fotomuseum for SITUATIONS/Deviant, David Dao provides an interactive web demo that educates users about the misuses of facial recognition technology. It also presents current research on fighting back against automated algorithms. One promising technique is “adversarial perturbations”: changes to a user’s profile picture that are invisible to the human eye but not to a machine. They can be used to help hide a user’s identity from automated recognition. Face/Off Singularity provides a tool for experimenting with ‘adversarial profile pictures’ and explains the science behind them.
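To make the idea of an adversarial perturbation concrete, here is a minimal sketch in Python. It is not the method used by Face/Off Singularity. It assumes a toy linear "recogniser" with random weights in place of a real face recognition network, and every name in it (`score`, `perturb`, `epsilon`) is hypothetical. Each pixel is nudged by at most 2/255 of the brightness range, in the direction that lowers the recogniser's confidence, in the spirit of the fast gradient sign method.

```python
import math
import random


def score(weights, bias, pixels):
    """Toy recogniser: logistic confidence that the image shows the target person."""
    z = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def perturb(weights, pixels, epsilon):
    """Move each pixel by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the logit with respect to a pixel is
    simply that pixel's weight, so the sign of the weight sets the direction.
    """
    adversarial = []
    for w, p in zip(weights, pixels):
        step = -epsilon if w > 0 else (epsilon if w < 0 else 0.0)
        # Keep the result a valid brightness value in [0, 1].
        adversarial.append(min(1.0, max(0.0, p + step)))
    return adversarial


random.seed(0)
n_pixels = 64 * 64  # assumed size of a small greyscale profile picture

# Hypothetical model and image: random weights, mid-range pixel values.
weights = [random.uniform(-1.0, 1.0) for _ in range(n_pixels)]
pixels = [random.uniform(0.2, 0.8) for _ in range(n_pixels)]

# Choose the bias so the unmodified picture is recognised with high confidence.
bias = 3.0 - sum(w * p for w, p in zip(weights, pixels))

epsilon = 2.0 / 255.0  # far below what a viewer would notice per pixel
adversarial = perturb(weights, pixels, epsilon)

original_score = score(weights, bias, pixels)
adversarial_score = score(weights, bias, adversarial)
largest_change = max(abs(a - p) for a, p in zip(adversarial, pixels))

print("confidence on original picture:   ", original_score)
print("confidence on adversarial picture:", adversarial_score)
print("largest change to any pixel:      ", largest_change)
```

The effect comes from scale: each change is tiny, but thousands of them all push the recogniser in the same direction. Real recognition systems are deep networks rather than a single linear layer, so practical attacks must estimate the gradient through the network instead of reading it off the weights.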

Access Face/Off Singularity online here: awfulai.com

More by David Dao: daviddao.org

Cluster: Deviant