Picturing Computation

In my last post I suggested the screenshot exemplified the latest iteration of a much broader technique for the secondary mediation of an existing visual medium, one which has risen to prominence over the past forty years as part of the convergence of all visual media with the screens of modern computational devices. In this post I want to move away from broad generalizations and ask what the screenshot is as a material practice tied to computation as a distinct technical form. If, at its core, the screenshot is a picture of a computer screen, then it is through the screenshot that we might begin to examine the multiple and transforming ways we have pictured computation as a technical practice over the past seventy years. Asking how we picture computation here means both how we photograph computers as technical and commercial objects and how we visually document the cultures of use that are mediated through the screens of these machines. I will begin by describing the very first computer screens developed in the immediate postwar period, and the competing uses to which the screenshot was put: to document computation as a dynamic and interactive medium, and to capture and fix computational images that were otherwise ephemeral, impermanent, or invisible.

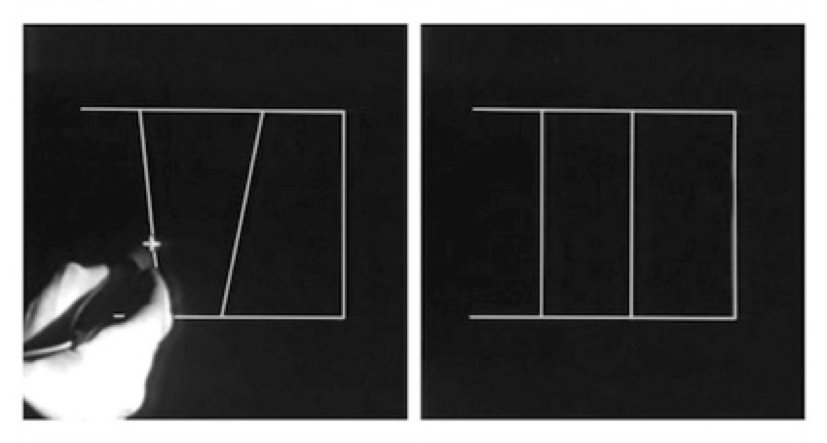

The digital screenshot as we know it today is a relatively recent invention. Prior to the 1990s there was no digital method for capturing the visual output of graphical systems. The image files and photo printers that make possible the vast majority of our contemporary computational images did not yet exist, so it was necessary to photograph the surface of a screen with an analog camera in order to preserve the images it produced.[1] In this early period very few people had access to computers of any sort, and those computers that did exist were massive and wildly expensive devices used primarily for numerical calculation, with their results output on paper. One of the earliest research sites for interactive graphical computing was the Lincoln Laboratory of the Massachusetts Institute of Technology, whose one-of-a-kind TX-2 computer had been designed specifically to test the potential of graphical, interactive computing decades before the development of personal computers as we understand them today. In this period of computational scarcity screenshots fulfilled an explicit need: reproducing the visual output of interactive simulations so that they could be seen by those without access to the multi-million dollar research systems required to run them. As architectural historian Matthew Allen has argued, the screenshot in this early period was a tool for exemplifying the interactive potential of the graphical computer, and in this context it took on a number of semiotic conventions that signaled what was, at that time, a novel use for computational machines.[2] Ironically, what screenshots captured here was not stillness but interaction, that is, the ability to communicate graphically with a computer at a moment when its principal media were punch cards and magnetic tape. In many ways these images were responsible for shaping the technological imaginary of interactive computing that would evolve into the machines we use today, picturing computation as something more than a tool for the calculation of numerical data.

[1] There is a parallel tradition of paper plotters used as the output devices for early computer graphics. Indeed, the vast majority of computational images in this early period were produced on paper, since computer graphics and computer screens were experimental technologies that would not be formalized until the 1970s and 1980s.

[2] Matthew Allen, “Representing Computer-Aided Design: Screenshots and the Interactive Computer circa 1960”, in Perspectives on Science 24, no. 6 (2016): 637–668.

A second, somewhat contradictory technique in this period was the use of screenshots to fix and preserve complex objects that were otherwise impossible to view on the experimental screens of the time, namely the cathode ray oscilloscope and its calligraphic or random-access display tube.[3] Adapted in the immediate postwar period from radar screens and later repurposed from electronic test equipment, these “random” or “calligraphic” displays did not use the linear and uniform scan lines found on nearly all television screens built before the early 2000s. Instead, the oscilloscope’s electron beam was designed to move along a curve, creating waveforms for use in electrical testing and calibration. When repurposed for early graphics, the display could be programmed with a series of simple points in Cartesian space, between which a line would be drawn. The images produced by these oscilloscopes are quite different from the pixel grid we associate with digital images and screens today, but for over thirty years these vector displays dominated the field of computer graphics.

[3] For a detailed discussion of these early screens, see Jacob Gaboury, “The Random-Access Image: Memory and the History of the Computer Screen”, in Grey Room no. 70 (Winter 2018): 24–53.
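For readers who think more easily in code, the difference between a vector display and a pixel grid can be sketched as a toy model. It is not the instruction format of the TX-2 or any historical machine. The function names `vector_segments` and `beam_path` are hypothetical, and the model assumes only what is described above: the display is handed a list of Cartesian points, and the beam draws a straight line from each point to the next.

```python
# A toy vector display list (hypothetical, not any historical format):
# the image is a sequence of Cartesian endpoints, not a grid of pixels.
Point = tuple[float, float]


def vector_segments(display_list: list[Point]) -> list[tuple[Point, Point]]:
    """Pair each point with the next: the beam draws one straight line per pair."""
    return list(zip(display_list, display_list[1:]))


def beam_path(display_list: list[Point], steps: int = 4) -> list[Point]:
    """Approximate the positions the beam sweeps through along each segment."""
    path: list[Point] = []
    for (x0, y0), (x1, y1) in vector_segments(display_list):
        for i in range(steps):
            t = i / steps  # fraction of the way along this segment
            path.append((x0 + (x1 - x0) * t, y0 + (y1 - y0) * t))
    if display_list:
        path.append(display_list[-1])  # the beam ends on the final point
    return path


# A closed square: five points, four lines.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.0, 0.0)]
print(len(vector_segments(square)))  # 4
print(beam_path([(0.0, 0.0), (1.0, 0.0)], steps=2))  # [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)]
```

Nothing in this model is stored as a grid of pixels. The image exists only as a list of endpoints and the path the beam travels, so a longer list means a longer path to retrace on every refresh.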

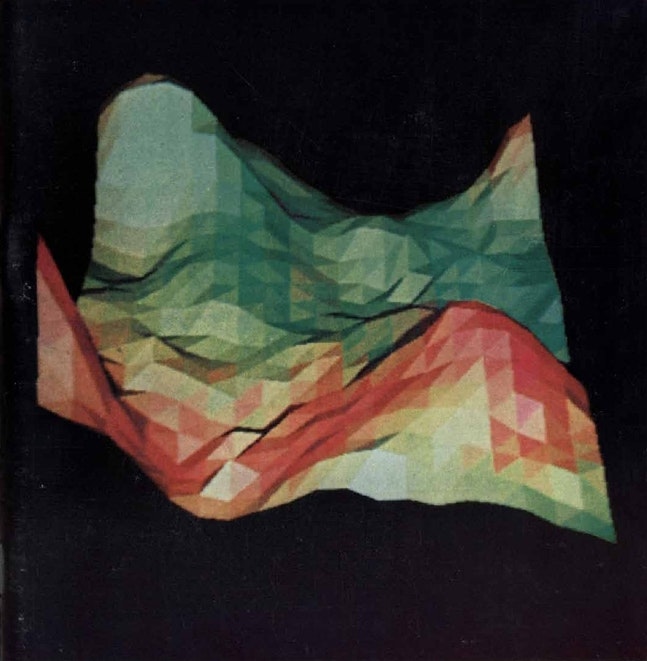

It was the physical limitations of these early displays that required this secondary mediation of the screenshot. While the sweeping motion of the electron beam across the screen of the oscilloscope allowed for highly programmable smooth curves, its irregular movement also restricted the speed at which the beam could travel. This meant that these early screens could not draw complex or opaque shapes quickly enough before the image began to fade, producing a noticeable flicker as the single beam struggled to refresh the phosphor on the face of the cathode ray tube. If researchers wanted to see the images they produced, they had to photograph their screens using customized oscilloscope cameras and Polaroid film.[4] In long exposures lasting several minutes, a single image would be etched line by line as the computer calculated the position and shade of each part of the object to be displayed. Much as with contemporary 3D rendering, producing these images was a process of exhaustive preparation and, to a degree, trial and error. Unlike contemporary methods, however, these early graphics could not be seen without the use of analog photography.

[4] An alternate technique for screen documentation used at AT&T Bell Laboratories and elsewhere was the Stromberg Carlson 4020 Microfilm Printer & Plotter. See Zabet Patterson, Peripheral Vision: Bell Labs, the SC 4020, and the Origins of Computer Art (Cambridge, Mass.: MIT Press, 2015).

All this raises an important question: why didn’t researchers simply hook their computers up to television screens? After all, commercial television is as old as the modern computer, and it would have allowed for more complex and realistic images. The answer is quite simple: television has no memory. While we may no longer think of it this way, television was designed as a live medium, accessed sequentially. A television does not store the images it displays. It is a vehicle for the display of information that retains no record of the signals passing through it, and so it cannot store and modify those signals for graphical interaction. It can only accept a signal of scan line data to be pushed out onto the face of a cathode ray tube, line by line from left to right and top to bottom. This feed must be constantly refreshed with new information if it is to remain visible, and its images cannot be paused, manipulated, or transformed by conventional means. Computation, however, is a process that relies on memory, and interaction requires the storage of information so that it can be acted on by a user. And so it was not until the computer screen was supplemented in the 1970s with a memory buffer, or bitmap, that the contemporary pixelated computer screen could come into being. This visual memory, originally called a “frame buffer” for the individual frames of information it held, survives today in the graphics hardware found in every personal computer, including the one on which you are reading these words. This is what distinguishes the interactive computer screen from other visual media: it is non-sequential, randomly accessible, and stored in memory.
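The contrast between a television signal and a frame buffer can be sketched as a toy model in Python. Everything here is an illustrative assumption rather than a description of real hardware. `FrameBuffer` and `television_signal` are hypothetical names, and pixels are plain integers held in a list of rows. The model shows only the difference described above: a stream that is consumed once, in order, versus a stored image that can be written in any order and read out again on every refresh.

```python
from collections.abc import Iterable, Iterator


def television_signal(scan_lines: Iterable[int]) -> Iterator[int]:
    """A broadcast-style feed: each value passes through once, in order, and is not kept."""
    yield from scan_lines


class FrameBuffer:
    """A toy frame buffer: pixel values held in memory and addressable in any order."""

    def __init__(self, width: int, height: int) -> None:
        self.width = width
        self.height = height
        # One row of pixel values per scan line, all initially dark (0).
        self.pixels = [[0] * width for _ in range(height)]

    def write(self, x: int, y: int, value: int) -> None:
        # Random access: change one pixel without touching the rest of the image.
        self.pixels[y][x] = value

    def read(self, x: int, y: int) -> int:
        return self.pixels[y][x]

    def scan_out(self) -> Iterator[int]:
        # Refresh: emit the stored pixels in raster order, left to right, top to bottom.
        for row in self.pixels:
            yield from row


# The stream is gone once it has been displayed.
signal = television_signal([5, 0, 0, 0, 0, 9])
first_pass = list(signal)   # [5, 0, 0, 0, 0, 9]
second_pass = list(signal)  # [] (nothing was stored)

# The frame buffer can be written out of order and scanned out repeatedly.
fb = FrameBuffer(width=3, height=2)
fb.write(2, 1, 9)  # bottom-right pixel first
fb.write(0, 0, 5)  # then top-left; the order of writes does not matter
print(list(fb.scan_out()))  # [5, 0, 0, 0, 0, 9]
print(list(fb.scan_out()))  # [5, 0, 0, 0, 0, 9] again, read back from memory
```

The two share the same raster order on output. What the frame buffer adds is the stored copy in between, which is what lets a program go back and change any part of the image.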

We see in this early period two distinct and somewhat contradictory uses for the screenshot as a technical practice. On the one hand, the screenshot documents the potential of the computer as a dynamic and interactive system, shaping popular conceptions of what a computer is and can do. On the other, the screenshot is a means of fixing or securing the act of computation by extracting its visual representation, allowing computation to become a static object that exceeds the temporality of the electron beam that shapes it. In the coming weeks we will see both uses extended as the screenshot moves from a technical to a vernacular practice.