Engineering Beyond Bias: It’s Time To Call the Experts

There’s a lot at stake when it comes to the growing role of algorithms in our lives. The good news is that a lot could be explained and clarified by professional and uncompromised thinkers who are protected within the walls of academia with freedom of academic inquiry and expression. If only they would scrutinize the big tech firms rather than stand by waiting to be hired. –Cathy O’Neil, “The Ivory Tower Can’t Keep Ignoring Tech”, New York Times, November 14, 2017

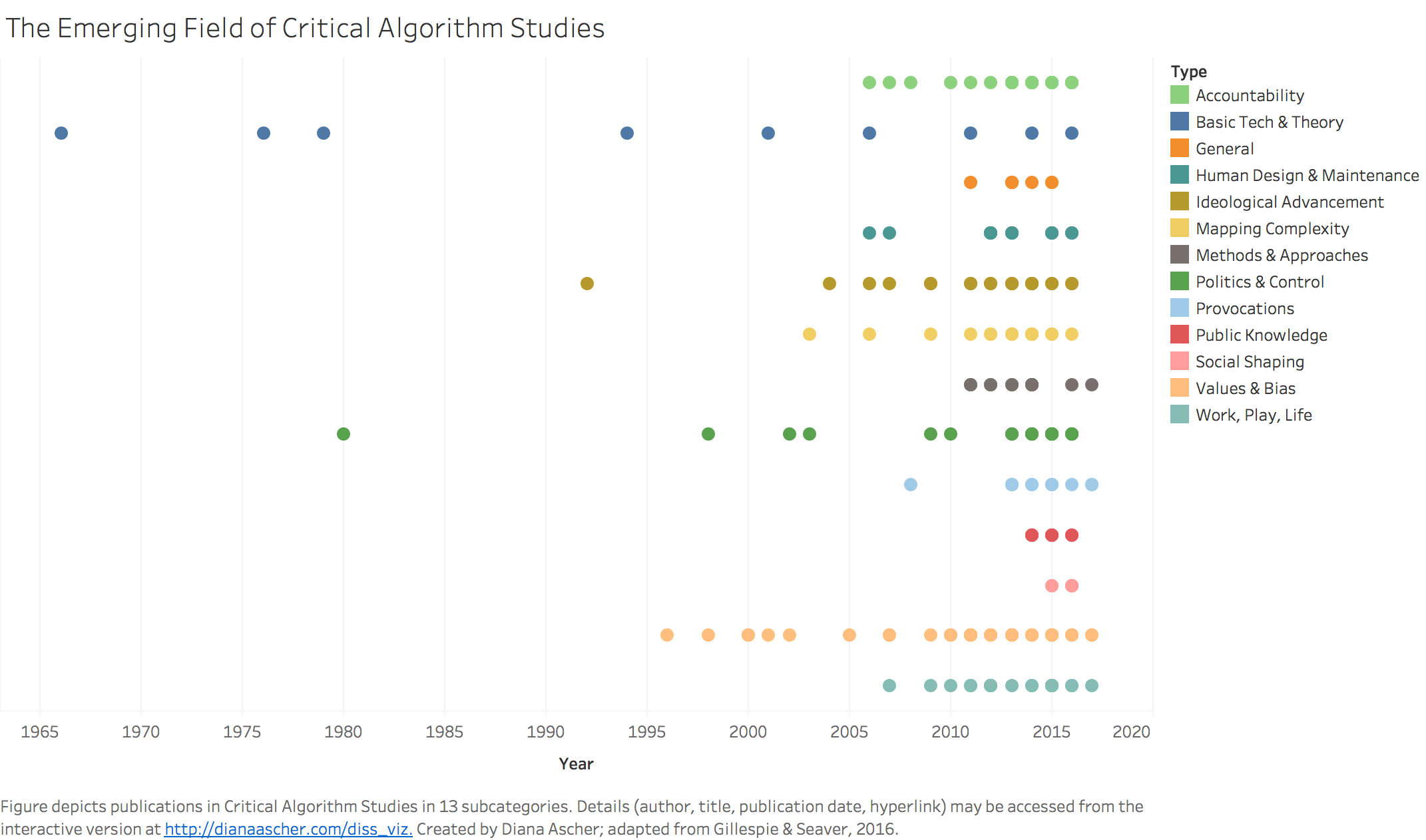

This month, data scientist Cathy O’Neil caused a Twitter storm when she alleged that academics are “asleep at the wheel” when it comes to critiquing artificial intelligence and algorithms and their impact on society. Within 24 hours, academics from the United States and Europe began to weigh in with evidence to the contrary, citing studies, conferences, scholars, and academic departments that have given more than three decades to the study of these issues. While the backlash came swift and hard, the import of our collective concerns about the deepening social impact of algorithms is only just beginning to dawn on the general public and the developers of the systems that allocate power in contemporary society. There are, indeed, legions of scholars invested in studying the harms of digital technologies and the consequences of their logics, and many of us showed up. Diana Ascher released an interactive infographic about the state of critical information science and the significant networks of scholars who are addressing issues of power, discrimination, bias, and even oppression in their work on digital technologies, which I found to be a powerful visualization of the breadth of our work.

The data show that many scholars care about these issues, and are dedicating their careers and lives to them. We care about the harm that comes from imposing new technologies onto old patterns and systems of inequitable distributions of safety, security, and quality of life. We care about the consequences and affordances of the hyper-investments in technology projects that give us, as my colleague Sarah T. Roberts often says, the “illusion of volition.” It’s as if we should be satisfied with the outcomes of, and our participation in, the digitization of every aspect of human life.

The rollout of these technologies in society is increasingly about profits and market opportunity for tech companies, with little regard for the long-term social impact, which has been well documented by dozens of scholars. One of the more troubling dimensions of the scholarly research on race and tech is the way in which race or gender are engaged with, or theorized as, variables, data points, or “problems” that need to be solved in the development of technology products and projects. There is a tremendous amount of theorizing and speculation that inequality and oppression can be tweaked or programmed into a paradigm of fairness and social equity, with little regard for the broader social and economic systems of oppression in which these technologies proliferate. While we have more data and technology than ever, we also have a radical increase in social and economic inequality. We are living in an era marked by rollbacks of civil rights and by open misogyny – which, when intersected, negatively impact Black and indigenous women the most – and we are in a crisis of consciousness about enacting shared values after almost forty years of neoliberal social and economic policy. The largest transfers of wealth from working- and middle-class families are happening before our eyes, facilitated by digital capitalism, and obscured by an onslaught of disinformation about it on social media.

Indeed, Twitter has been buzzing with tweets about the many people of color and women activists and scholars who have been critical for many years of the ways that race, gender, and power are encoded in digital technologies. Their claims, like the ones I make in my book, Algorithms of Oppression, are a recognition and effort to intervene in the tech sector – which has long been oblivious to historical, social, and political dimensions of racist and sexist oppression – while leveraging and legitimating these systems to justify gender discrimination, pay inequities, and systemic racist exclusion in the hiring of African Americans, Latinx, indigenous and women employees in tech industries. The work of academics and journalists is drawing increasing attention to racial and gender bias, or what I call data discrimination, in the sector. We see news headlines about the emergent use of software, or so-called “artificial intelligence,” to make important decisions, particularly in the judicial system. We see how white supremacists are harnessing the internet to promote racial apartheid in many nations, particularly the United States. We see women using the web to organize, mobilize, and resist tacit acceptance of misogyny, sexual harassment, and assault from national leaders in politics, business, and entertainment. We also see a replication of power dynamics at play as the concerns that have been championed by people of color are erased, while white scholars are increasingly credited for “discovering” bias and calling for change.

I have been researching, writing, and talking about racist and sexist bias in algorithms for nearly a decade. One of the points I consistently argue is that the structural dimensions of race, gender, and class are inextricably tied to one another, and are deeply embedded in digital technologies. Indeed, entire telecommunications and digital networks are built into, and co-located in, historical patterns of exploitation and oppression. From the mining of minerals to support ubiquitous computing, the Internet of Things, and the massive global distribution of electronics, to the well-established e-waste cities of the west coast of the continent of Africa, these infrastructures of labor and commerce are replications of old colonial models of extraction and dispensability of Black people and other non-European, non-White people throughout the Global South. Closer to home, new products like Facebook Live or Snapchat have gained traction through their racialized use – whether in the circulation of videos of African Americans being killed by law enforcement, or in the allegedly playful promotion of skin lightening filters. Indeed, the entire ecosystem of the digital could not exist without pre-existing infrastructures of racialized oppression and its ideologies, which industry and nation-states tap to fuel their global supply chains for all things digital.

As we think about addressing some of these issues, particularly at the layer of software, I am thinking about a news article a colleague recently sent to me about a new industry lab that’s trying to tackle racial biases in AI by hiring Black computer scientists to investigate potential biases. I suppose the logic here is that racial bias can be fixed at a technical level by hiring talented programmers who, because of their personal, lived experiences in a racist society, allegedly better understand and can recognize offensive decisions made by algorithmically driven, automated decision-making systems. There has been a tremendous push to diversify technology corridors like Silicon Valley by hiring more historically underrepresented programmers and data scientists, as if this alone would solve the problems of racist bias in technology products.

I believe, however, that people who study the protracted dimensions of racist and gendered systems of oppression are best qualified to think through these problems. One cannot design technology for society when one knows very little about the history of these systems: what they are, the logics of how they work, their fundamental ties to capitalism and patriarchy, and how they are implicated in every dimension of how we live, work, and create. In the context of the United States, the place to learn about these systems is in Black Studies, Chicano/Latino/a Studies, Asian-American Studies, and Native American or American Indian Studies programs. We can also learn about how gender and sexuality structure societies in Gender and Women’s Studies courses, and, increasingly, these academic departments take an intersectional approach to exploring the interwoven dimensions of power along the axes of race, gender, and sexuality. No computer science or engineering program with which I am familiar makes the research in these fields central to its degree program. In fact, many future engineers can opt out of general education requirements in the humanities and social sciences through Advanced Placement (AP) testing in high school, meaning they graduate from college with very little university-level education in the humanities, arts, and social sciences. I explore some of this in a forthcoming book, Valley Values: Silicon Valley’s Dangerous Domination of Politics and the Public Imagination, co-written with Sarah T. Roberts.

What we need are new models of intervention to think beyond bias. We need to study the infrastructures of racism and sexism, and how these are embedded within the digital, and we need to foreground experts and scholars of race, gender, and power to help reframe our approaches. Scholars of race and gender have been scrutinizing racialized capitalism, not much different from big tech firms’ practices, for over a hundred years. We need tech scholars, and the industry at large, to take notice and seek the expertise.